Sum of Convex Continuously Differentiable Functions Does Not Have Minima

Convex function on an interval.

A function (in black) is convex if and only if the region above its graph (in green) is a convex set.

A graph of the bivariate convex function $x^2 + xy + y^2$.

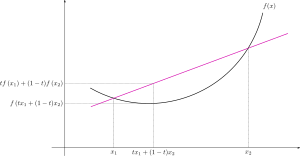

In mathematics, a real-valued function is called convex if the line segment between any two points on the graph of the function lies above or on the graph between the two points. Equivalently, a function is convex if its epigraph (the set of points on or above the graph of the function) is a convex set. A twice-differentiable function of a single variable is convex if and only if its second derivative is nonnegative on its entire domain.[1] Well-known examples of convex functions of a single variable include the quadratic function $x^2$ and the exponential function $e^x$. In simple terms, a convex function refers to a function whose graph is shaped like a cup $\cup$, while a concave function's graph is shaped like a cap $\cap$.

Convex functions play an important role in many areas of mathematics. They are especially important in the study of optimization problems where they are distinguished by a number of convenient properties. For instance, a strictly convex function on an open set has no more than one minimum. Even in infinite-dimensional spaces, under suitable additional hypotheses, convex functions continue to satisfy such properties and as a result, they are the most well-understood functionals in the calculus of variations. In probability theory, a convex function applied to the expected value of a random variable is always bounded above by the expected value of the convex function of the random variable. This result, known as Jensen's inequality, can be used to deduce inequalities such as the arithmetic–geometric mean inequality and Hölder's inequality.
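Jensen's inequality and its link to the arithmetic–geometric mean inequality can be checked numerically. The sketch below is an illustration, not a proof: it computes the Jensen gap $\operatorname{E}[f(X)] - f(\operatorname{E}[X])$ for a discrete random variable. The helper name `jensen_gap` and the sample data are hypothetical choices. Taking $f = -\log$, which is convex on $(0, \infty)$, the gap equals $\log(\mathrm{AM}/\mathrm{GM})$, so a non-negative gap is exactly the statement AM ≥ GM.

```python
import math


def jensen_gap(f, values, weights):
    """Return E[f(X)] - f(E[X]) for a discrete random variable X.

    X takes values[i] with probability weights[i]; the weights are assumed
    non-negative and summing to 1. For a convex f the result is >= 0.
    """
    mean = sum(w * x for x, w in zip(values, weights))
    mean_of_f = sum(w * f(x) for x, w in zip(values, weights))
    return mean_of_f - f(mean)


# Hypothetical sample data: three positive numbers, equally weighted.
xs = [1.0, 4.0, 16.0]
ws = [1 / 3] * 3

# -log is convex on (0, inf), so the Jensen gap is non-negative ...
gap = jensen_gap(lambda x: -math.log(x), xs, ws)

# ... and it equals log(AM / GM), which is the AM-GM inequality in disguise.
arithmetic_mean = sum(xs) / len(xs)
geometric_mean = math.prod(xs) ** (1 / len(xs))
print(f"gap={gap:.4f}, AM={arithmetic_mean:.4f}, GM={geometric_mean:.4f}")
```

Any other convex `f` (for example `lambda x: x * x`) can be passed in the same way.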

Definition

Visualizing a convex function and Jensen's Inequality

Let $X$ be a convex subset of a real vector space and let $f: X \to \mathbb{R}$ be a function.

Then $f$ is called convex if and only if any of the following equivalent conditions hold:

- For all $0 \le t \le 1$ and all $x_1, x_2 \in X$: $f(t x_1 + (1-t) x_2) \le t f(x_1) + (1-t) f(x_2)$. The right-hand side represents the straight line between $(x_1, f(x_1))$ and $(x_2, f(x_2))$ in the graph of $f$ as a function of $t$; increasing $t$ from $0$ to $1$ or decreasing $t$ from $1$ to $0$ sweeps this line. Similarly, the argument of the function $f$ on the left-hand side represents the straight line between $x_1$ and $x_2$ in $X$, or the $x$-axis of the graph of $f$. So this condition requires that the straight line between any pair of points on the curve of $f$ lies above or just meets the graph.[2]

- For all $0 < t < 1$ and all $x_1, x_2 \in X$ such that $x_1 \ne x_2$: $f(t x_1 + (1-t) x_2) \le t f(x_1) + (1-t) f(x_2)$. This second condition differs from the first in that it excludes the intersection points (for example, $(x_1, f(x_1))$ and $(x_2, f(x_2))$) between the curve of $f$ and the straight line through a pair of its points (the straight line is represented by the right-hand side of this condition). The first condition includes the intersection points, since at $t = 0$ or $t = 1$, or when $x_1 = x_2$, it reduces to $f(x_2) \le f(x_2)$ or $f(x_1) \le f(x_1)$. In fact, the intersection points need not be considered in a convexity condition of the form $f(t x_1 + (1-t) x_2) \le t f(x_1) + (1-t) f(x_2)$, because $f(x_1) \le f(x_1)$ and $f(x_2) \le f(x_2)$ are always true (so they add nothing to the condition).

The second statement characterizing convex functions that are valued in the real line $\mathbb{R}$ is also the statement used to define convex functions that are valued in the extended real number line $[-\infty, \infty] = \mathbb{R} \cup \{\pm\infty\}$, where such a function $f$ is allowed to (but is not required to) take $\pm\infty$ as a value. The first statement is not used because it permits $t$ to take $0$ or $1$ as a value, in which case, if $f(x_1) = \pm\infty$ or $f(x_2) = \pm\infty$, respectively, then $t f(x_1) + (1-t) f(x_2)$ would be undefined (because the multiplications $0 \cdot \infty$ and $0 \cdot (-\infty)$ are undefined). The sum $-\infty + \infty$ is also undefined, so a convex extended real-valued function is typically only allowed to take exactly one of $-\infty$ and $+\infty$ as a value.

The second statement can also be modified to get the definition of strict convexity, where the latter is obtained by replacing $\le$ with the strict inequality $<$. Explicitly, the map $f$ is called strictly convex if and only if for all real $0 < t < 1$ and all $x_1, x_2 \in X$ such that $x_1 \ne x_2$:

$$f(t x_1 + (1-t) x_2) < t f(x_1) + (1-t) f(x_2)$$

A strictly convex function $f$ is a function such that the straight line between any pair of points on the curve of $f$ lies above the curve, except at the intersection points between the straight line and the curve.

The function $f$ is said to be concave (resp. strictly concave) if $-f$ ($f$ multiplied by $-1$) is convex (resp. strictly convex).
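The definitions above lend themselves to a quick numerical sanity check. The sketch below uses a hypothetical helper, `find_convexity_violation`, that tests the second (non-strict or strict) inequality on a finite grid of points and values of $t$. Finding a violating triple proves that a function is not convex; finding none is only evidence, not a proof. The tolerance `tol` is an assumption added to absorb floating-point rounding, and concavity is tested through $-f$ as in the definition above.

```python
import itertools


def find_convexity_violation(f, points, ts, strict=False, tol=1e-12):
    """Search sample points for a violation of (strict) convexity.

    Checks f(t*x1 + (1-t)*x2) <= t*f(x1) + (1-t)*f(x2) for every pair of
    distinct sample points and every t in ts (each assumed to lie in (0, 1)).
    With strict=True the inequality must hold strictly. Returns the first
    violating (x1, x2, t), or None. Passing on samples does not prove convexity.
    """
    for x1, x2 in itertools.combinations(points, 2):
        for t in ts:
            lhs = f(t * x1 + (1 - t) * x2)
            rhs = t * f(x1) + (1 - t) * f(x2)
            ok = lhs < rhs - tol if strict else lhs <= rhs + tol
            if not ok:
                return (x1, x2, t)
    return None


grid = [i / 4 for i in range(-8, 9)]   # sample points in [-2, 2]
ts = [0.1, 0.25, 0.5, 0.75, 0.9]

# x^2 is strictly convex; |x| is convex but not strictly convex.
print(find_convexity_violation(lambda x: x * x, grid, ts, strict=True))
print(find_convexity_violation(abs, grid, ts))
print(find_convexity_violation(abs, grid, ts, strict=True))
# -x^2 is concave, so it violates convexity somewhere on the grid.
print(find_convexity_violation(lambda x: -(x * x), grid, ts))
```

The first two calls return `None`; the last two return a violating triple (for $|x|$ under the strict test, any two points of the same sign lie on a straight piece of the graph).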

Alternative naming

The term convex is often referred to as convex down or concave upward, and the term concave is often referred to as concave down or convex upward.[3][4][5] If the term "convex" is used without an "up" or "down" keyword, then it refers strictly to a cup-shaped graph $\cup$. As an example, Jensen's inequality refers to an inequality involving a convex, or convex-(down), function.[6]

Properties

Many properties of convex functions have the same simple formulation for functions of many variables as for functions of one variable. See below the properties for the case of many variables, as some of them are not listed for functions of one variable.

Functions of one variable

- Suppose $f$ is a function of one real variable defined on an interval, and let $R(x_1, x_2) = \frac{f(x_2) - f(x_1)}{x_2 - x_1}$ (note that $R(x_1, x_2)$ is the slope of the purple line in the above drawing; the function $R$ is symmetric in $(x_1, x_2)$, which means that $R$ does not change when $x_1$ and $x_2$ are exchanged). $f$ is convex if and only if $R(x_1, x_2)$ is monotonically non-decreasing in $x_1$ for every fixed $x_2$ (or vice versa). This characterization of convexity is quite useful to prove the following results.

- A convex function $f$ of one real variable defined on some open interval $C$ is continuous on $C$, and it admits left and right derivatives, which are monotonically non-decreasing. As a consequence, $f$ is differentiable at all but at most countably many points; the set on which $f$ is not differentiable can, however, still be dense. If $C$ is closed, then $f$ may fail to be continuous at the endpoints of $C$ (an example is shown in the examples section).

- A differentiable function of one variable is convex on an interval if and only if its derivative is monotonically non-decreasing on that interval. If a function is differentiable and convex then it is also continuously differentiable.

- A differentiable function of one variable is convex on an interval if and only if its graph lies above all of its tangents:[7]: 69 $f(x) \ge f(y) + f'(y)(x - y)$ for all $x$ and $y$ in the interval.

- A twice differentiable function of one variable is convex on an interval if and only if its second derivative is non-negative there; this gives a practical test for convexity. Visually, a twice differentiable convex function "curves up", without any bends the other way (inflection points). If its second derivative is positive at all points then the function is strictly convex, but the converse does not hold. For example, the second derivative of $f(x) = x^4$ is $f''(x) = 12x^2$, which is zero for $x = 0$, but $x^4$ is strictly convex.

- If $f$ is a convex function of one real variable, and $f(0) \le 0$, then $f$ is superadditive on the positive reals, that is, $f(a) + f(b) \le f(a + b)$ for positive real numbers $a$ and $b$.

Proof

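Since $f$ is convex, by using one of the convex function definitions above and letting $x_2 = 0$, it follows that for all real $0 \le t \le 1$,

$$f(t x_1) = f(t x_1 + (1-t) \cdot 0) \le t f(x_1) + (1-t) f(0) \le t f(x_1).$$

From this it follows that

$$f(a) + f(b) = f\left((a+b) \frac{a}{a+b}\right) + f\left((a+b) \frac{b}{a+b}\right) \le \frac{a}{a+b} f(a+b) + \frac{b}{a+b} f(a+b) = f(a+b).$$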

- A function $f$ is midpoint convex on an interval $C$ if for all $x_1, x_2 \in C$: $f\left(\frac{x_1 + x_2}{2}\right) \le \frac{f(x_1) + f(x_2)}{2}$. This condition is only slightly weaker than convexity. For example, a real-valued Lebesgue measurable function that is midpoint convex is convex: this is a theorem of Sierpiński.[8] In particular, a continuous function that is midpoint convex will be convex.
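The slope characterization in the first bullet above can be turned into a small test. The sketch below is a hypothetical illustration, not a library routine: `secant_slope` computes $R(x_1, x_2)$ and `slopes_nondecreasing` checks, on a sorted grid, that $R(x_1, x_2)$ does not decrease in $x_1$ for each fixed $x_2$. As with any sampling check, a pass on the grid is evidence rather than proof; a failure does show non-convexity.

```python
import math


def secant_slope(f, x1, x2):
    """R(x1, x2) = (f(x2) - f(x1)) / (x2 - x1), symmetric in x1 and x2."""
    return (f(x2) - f(x1)) / (x2 - x1)


def slopes_nondecreasing(f, points, tol=1e-12):
    """Check that R(x1, x2) is non-decreasing in x1 for each fixed x2.

    `points` is assumed sorted and strictly increasing; the pair x1 == x2
    is skipped because R is undefined there.
    """
    for x2 in points:
        slopes = [secant_slope(f, x1, x2) for x1 in points if x1 != x2]
        if any(a > b + tol for a, b in zip(slopes, slopes[1:])):
            return False
    return True


grid = [i / 10 for i in range(-10, 11)]   # sorted sample points in [-1, 1]
print(slopes_nondecreasing(math.exp, grid))           # exp is convex
print(slopes_nondecreasing(lambda x: x ** 3, grid))   # x^3 is not convex on [-1, 1]
```

For $x^3$ and fixed $x_2 = 0$, the slope is $R(x_1, 0) = x_1^2$, which decreases as $x_1$ runs through negative values, so the second check fails.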

Functions of several variables

- A function $f: X \to [-\infty, \infty]$ valued in the extended real numbers is convex if and only if its epigraph $\{(x, r) \in X \times \mathbb{R} : r \ge f(x)\}$ is a convex set.

- A differentiable function $f$ defined on a convex domain is convex if and only if $f(x) \ge f(y) + \nabla f(y)^{T} (x - y)$ holds for all $x, y$ in the domain.

- A twice differentiable function of several variables is convex on a convex set if and only if its Hessian matrix of second partial derivatives is positive semidefinite on the interior of the convex set.

- For a convex function $f$, the sublevel sets $\{x : f(x) < a\}$ and $\{x : f(x) \le a\}$ with $a \in \mathbb{R}$ are convex sets. A function that satisfies this property is called a quasiconvex function and may fail to be a convex function.

- Consequently, the set of global minimisers of a convex function $f$ is a convex set: $\operatorname{argmin} f$ is convex.

- Any local minimum of a convex function is also a global minimum. A strictly convex function will have at most one global minimum.[9]

- Jensen's inequality applies to every convex function $f$. If $X$ is a random variable taking values in the domain of $f$, then $\operatorname{E}(f(X)) \ge f(\operatorname{E}(X))$, where $\operatorname{E}$ denotes the mathematical expectation. Indeed, convex functions are exactly those that satisfy the hypothesis of Jensen's inequality.

- A first-order homogeneous function of two positive variables $x$ and $y$ (that is, a function satisfying $f(ax, ay) = a f(x, y)$ for all positive real $a, x, y > 0$) that is convex in one variable must be convex in the other variable.[10]
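For a function of two variables whose Hessian is constant, the Hessian criterion in the list above reduces to a check of principal minors. The sketch below assumes the Hessian is supplied as the three distinct entries of a symmetric 2×2 matrix; `is_psd_2x2` is a hypothetical helper. It uses the bivariate function $x^2 + xy + y^2$ from the figure at the top, whose Hessian is $\begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$. For a non-constant Hessian, the same check would have to hold at every point of the interior of the domain.

```python
def is_psd_2x2(a, b, c, tol=1e-12):
    """Return True if the symmetric matrix [[a, b], [b, c]] is positive semidefinite.

    For a symmetric 2x2 matrix this holds exactly when all principal minors
    are non-negative: the diagonal entries a and c, and the determinant.
    """
    return a >= -tol and c >= -tol and a * c - b * b >= -tol


# f(x, y) = x^2 + x*y + y^2 has the constant Hessian [[2, 1], [1, 2]].
print(is_psd_2x2(2.0, 1.0, 2.0))   # True: f is convex on R^2

# g(x, y) = x^2 + 3*x*y + y^2 has Hessian [[2, 3], [3, 2]], determinant -5.
print(is_psd_2x2(2.0, 3.0, 2.0))   # False: g is not convex
```

Checking all principal minors (not only the leading ones) matters for semidefiniteness: the leading-minor test alone would accept $\begin{pmatrix} 0 & 0 \\ 0 & -1 \end{pmatrix}$.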

Operations that preserve convexity

- $f$ is concave if and only if $-f$ is convex.

- If $r$ is any real number then $r + f$ is convex if and only if $f$ is convex.

- Nonnegative weighted sums:

- if $w_1, \ldots, w_n \ge 0$ and $f_1, \ldots, f_n$ are all convex, then so is $w_1 f_1 + \cdots + w_n f_n$. In particular, the sum of two convex functions is convex.

- this property extends to infinite sums, integrals and expected values as well (provided that they exist).

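- Elementwise maximum: let $\{f_i\}_{i \in I}$ be a collection of convex functions. Then $g(x) = \sup_{i \in I} f_i(x)$ is convex. The domain of $g$ is the collection of points where the expression is finite. Important special cases:

- If $f_1, \ldots, f_n$ are convex functions then so is $g(x) = \max\{f_1(x), \ldots, f_n(x)\}$.

- Danskin's theorem: If $f(x, y)$ is convex in $x$ then $g(x) = \sup_{y \in C} f(x, y)$ is convex in $x$ even if $C$ is not a convex set.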

- Composition:

- Minimization: If $f(x, y)$ is convex in $(x, y)$ then $g(x) = \inf_{y \in C} f(x, y)$ is convex in $x$, provided that $C$ is a convex set and that $g(x) \ne -\infty$.

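- If $f$ is convex, then its perspective $g(x, t) = t f\left(\frac{x}{t}\right)$ with domain $\{(x, t) : \frac{x}{t} \in \operatorname{Dom}(f),\ t > 0\}$ is convex.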

- A function $f$ defined on a vector space $X$ is convex and satisfies $f(0) \le 0$ if and only if $f(ax + by) \le a f(x) + b f(y)$ for any $x, y \in X$ and any non-negative real numbers $a, b$ that satisfy $a + b \le 1$.
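The non-negative-weighted-sum, elementwise-maximum and constant-shift rules above can be illustrated on sample points. In this sketch, `convex_on_samples` is a hypothetical sampling check (passing it is evidence, not proof), and the three building blocks $x^2$, $e^x$ and $|x - 1|$ are arbitrary convex choices. The last function uses a negative weight, which falls outside the weighted-sum rule, to show that the non-negativity assumption matters.

```python
import math


def convex_on_samples(f, points, ts=(0.25, 0.5, 0.75), tol=1e-9):
    """True if f(t*x + (1-t)*y) <= t*f(x) + (1-t)*f(y) for all sampled x, y, t.

    Passing this check is evidence of convexity on the samples, not a proof.
    """
    return all(
        f(t * x + (1 - t) * y) <= t * f(x) + (1 - t) * f(y) + tol
        for x in points for y in points for t in ts
    )


def f1(x):
    return x * x                        # convex quadratic


def f2(x):
    return math.exp(x)                  # convex exponential


def f3(x):
    return abs(x - 1)                   # convex shifted absolute value


def weighted_sum(x):
    return 2.0 * f1(x) + 0.5 * f2(x)    # non-negative weights


def pointwise_max(x):
    return max(f1(x), f2(x), f3(x))     # elementwise maximum


def shifted(x):
    return 3.0 + f3(x)                  # r + f with r = 3


def negative_weight(x):
    return f1(x) - 2.0 * f2(x)          # weight -2 on f2 breaks the rule


grid = [i / 5 for i in range(-10, 11)]  # sample points in [-2, 2]
for g in (weighted_sum, pointwise_max, shifted, negative_weight):
    print(g.__name__, convex_on_samples(g, grid))
```

The first three functions pass the check; `negative_weight` fails, since its second derivative $2 - 2e^x$ is negative for $x > 0$.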

Strongly convex functions

The concept of strong convexity extends and parametrizes the notion of strict convexity. A strongly convex function is also strictly convex, but not vice versa.

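A differentiable function $f$ is called strongly convex with parameter $m > 0$ if the following inequality holds for all points $x, y$ in its domain:[11]

$$(\nabla f(x) - \nabla f(y))^{T} (x - y) \ge m \|x - y\|_2^2$$

or, more generally,

$$\langle \nabla f(x) - \nabla f(y), x - y \rangle \ge m \|x - y\|^2$$

where $\langle \cdot, \cdot \rangle$ is any inner product, and $\|\cdot\|$ is the corresponding norm. Some authors, such as [12], refer to functions satisfying this inequality as elliptic functions.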

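An equivalent condition is the following:[13]

$$f(y) \ge f(x) + \nabla f(x)^{T} (y - x) + \frac{m}{2} \|y - x\|_2^2$$

It is not necessary for a function to be differentiable in order to be strongly convex. A third definition[13] for a strongly convex function, with parameter $m$, is that, for all $x, y$ in the domain and $t \in [0, 1]$,

$$f(t x + (1-t) y) \le t f(x) + (1-t) f(y) - \frac{1}{2} m t (1-t) \|x - y\|_2^2$$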

Notice that this definition approaches the definition for strict convexity as $m \to 0$ and is identical to the definition of a convex function when $m = 0$. Despite this, functions exist that are strictly convex but are not strongly convex for any $m > 0$ (see example below).
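The third definition can be evaluated directly in one dimension, with $|\cdot|$ as the norm. The sketch below uses a hypothetical helper, `strong_convexity_slack`, that returns the right-hand side minus the left-hand side of that inequality. For $x^2$ with $m = 2$ the inequality holds with equality, because $t x^2 + (1-t) y^2 - (t x + (1-t) y)^2 = t(1-t)(x - y)^2$. For $x^4$, the symmetric points $x = -\varepsilon$, $y = \varepsilon$ with $t = \tfrac12$ give slack $\varepsilon^4 - \tfrac{m}{2}\varepsilon^2$, which is negative once $\varepsilon^2 < m/2$, so no $m > 0$ works.

```python
def strong_convexity_slack(f, m, x, y, t):
    """Right-hand side minus left-hand side of the third strong-convexity
    definition, for real x, y and t in [0, 1] (absolute value as the norm).

    f is strongly convex with parameter m exactly when this slack is
    non-negative for every x, y in the domain and every t in [0, 1].
    """
    rhs = t * f(x) + (1 - t) * f(y) - 0.5 * m * t * (1 - t) * (x - y) ** 2
    return rhs - f(t * x + (1 - t) * y)


def square(x):
    return x ** 2


def quartic(x):
    return x ** 4


# For x^2 with m = 2 the inequality holds with equality (slack is 0 up to rounding).
print(strong_convexity_slack(square, 2.0, -3.0, 5.0, 0.3))

# For x^4, every m > 0 fails near 0: with x = -eps, y = eps, t = 1/2 the
# slack is eps**4 - (m / 2) * eps**2, which is negative when eps**2 < m / 2.
for m in (1.0, 0.1, 0.01):
    eps = m ** 0.5 / 2
    print(m, strong_convexity_slack(quartic, m, -eps, eps, 0.5) < 0)
```

The choice $\varepsilon = \sqrt{m}/2$ is one convenient witness (it gives slack $-m^2/16$); any smaller $\varepsilon$ would also work.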

If the function $f$ is twice continuously differentiable, then it is strongly convex with parameter $m$ if and only if $\nabla^2 f(x) \succeq m I$ for all $x$ in the domain, where $I$ is the identity and $\nabla^2 f$ is the Hessian matrix, and the inequality $\succeq$ means that $\nabla^2 f(x) - m I$ is positive semi-definite. This is equivalent to requiring that the minimum eigenvalue of $\nabla^2 f(x)$ be at least $m$ for all $x$. If the domain is just the real line, then $\nabla^2 f(x)$ is just the second derivative $f''(x)$, so the condition becomes $f''(x) \ge m$. If $m = 0$, then this means the Hessian is positive semidefinite (or, if the domain is the real line, that $f''(x) \ge 0$), which implies the function is convex, and perhaps strictly convex, but not necessarily strongly convex.

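Assuming still that the function is twice continuously differentiable, one can show that the lower bound of $\nabla^2 f(x)$ implies that it is strongly convex. Using Taylor's theorem, there exists

$$z \in \{t x + (1-t) y : t \in [0, 1]\}$$

such that

$$f(y) = f(x) + \nabla f(x)^{T} (y - x) + \frac{1}{2} (y - x)^{T} \nabla^2 f(z) (y - x).$$

Then

$$(y - x)^{T} \nabla^2 f(z) (y - x) \ge m (y - x)^{T} (y - x)$$

by the assumption about the eigenvalues, and hence we recover the second strong convexity equation above.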

A function $f$ is strongly convex with parameter $m$ if and only if the function

$$x \mapsto f(x) - \frac{m}{2} \|x\|^2$$

is convex.

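The distinction between convex, strictly convex, and strongly convex can be subtle at first glance. If $f$ is twice continuously differentiable and the domain is the real line, then we can characterize it as follows:

- $f$ is convex if and only if $f''(x) \ge 0$ for all $x$.

- $f$ is strictly convex if $f''(x) > 0$ for all $x$ (this is sufficient, but not necessary).

- $f$ is strongly convex if and only if $f''(x) \ge m > 0$ for all $x$.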

For example, let $f$ be strictly convex, and suppose there is a sequence of points $(x_n)$ such that $f''(x_n) = \frac{1}{n}$. Even though $f''(x_n) > 0$, the function is not strongly convex because $f''(x)$ will become arbitrarily small.

A twice continuously differentiable function $f$ on a compact domain $X$ that satisfies $f''(x) > 0$ for all $x \in X$ is strongly convex. The proof of this statement follows from the extreme value theorem, which states that a continuous function on a compact set has a maximum and minimum.
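The compact-domain statement suggests a simple way to estimate a strong-convexity parameter in one dimension: take the minimum of $f''$ over the interval. The sketch below approximates that minimum on a uniform grid. `estimate_strong_convexity` is a hypothetical helper, and a grid estimate can overshoot the true minimum when the minimiser lies between grid points. With $f(x) = x^4$, the interval $[1, 2]$ gives $m = 12$, while $[-1, 1]$ contains $x = 0$ where $f''$ vanishes, so the hypothesis $f'' > 0$ fails there.

```python
def estimate_strong_convexity(f2, a, b, n=1000):
    """Approximate the minimum of a second derivative f2 over [a, b].

    Samples f2 on a uniform grid of n + 1 points. If f2 is continuous, the
    extreme value theorem guarantees a true minimum on the compact interval;
    when that minimum is positive it serves as a strong-convexity parameter m.
    A grid estimate can overshoot the true minimum if the minimiser falls
    between grid points.
    """
    return min(f2(a + (b - a) * i / n) for i in range(n + 1))


def quartic_second_derivative(x):
    return 12 * x ** 2      # f''(x) for f(x) = x^4


print(estimate_strong_convexity(quartic_second_derivative, 1.0, 2.0))   # 12.0, at x = 1
print(estimate_strong_convexity(quartic_second_derivative, -1.0, 1.0))  # 0.0, at x = 0
```

In both calls the minimiser happens to be a grid point, so the estimates are exact.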

Strongly convex functions are in general easier to work with than convex or strictly convex functions, since they are a smaller class. Like strictly convex functions, strongly convex functions have unique minima on compact sets.

Uniformly convex functions

A uniformly convex function,[14][15] with modulus $\phi$, is a function $f$ that, for all $x, y$ in the domain and $t \in [0, 1]$, satisfies

$$f(t x + (1-t) y) \le t f(x) + (1-t) f(y) - t (1-t) \phi(\|x - y\|)$$

where $\phi$ is a function that is non-negative and vanishes only at 0. This is a generalization of the concept of strongly convex function; by taking $\phi(\alpha) = \frac{m}{2} \alpha^2$ we recover the definition of strong convexity.

It is worth noting that some authors require the modulus to be an increasing function,[15] but this condition is not required by all authors.[14]

Examples

Functions of one variable

- The function $f(x) = x^2$ has $f''(x) = 2 > 0$, so $f$ is a convex function. It is also strongly convex (and hence strictly convex too), with strong convexity constant 2.

- The function $f(x) = x^4$ has $f''(x) = 12x^2 \ge 0$, so $f$ is a convex function. It is strictly convex, even though the second derivative is not strictly positive at all points. It is not strongly convex.

- The absolute value function $f(x) = |x|$ is convex (as reflected in the triangle inequality), even though it does not have a derivative at the point $x = 0$. It is not strictly convex.

- The function $f(x) = |x|^p$ for $p \ge 1$ is convex.

- The exponential function $f(x) = e^x$ is convex. It is also strictly convex, since $f''(x) = e^x > 0$, but it is not strongly convex since the second derivative can be arbitrarily close to zero. More generally, the function $g(x) = e^{f(x)}$ is logarithmically convex if $f$ is a convex function. The term "superconvex" is sometimes used instead.[16]

- The function $f$ with domain $[0, 1]$ defined by $f(0) = f(1) = 1$ and $f(x) = 0$ for $0 < x < 1$ is convex; it is continuous on the open interval $(0, 1)$ but not continuous at 0 and 1.

- The function $x^3$ has second derivative $6x$; thus it is convex on the set where $x \ge 0$ and concave on the set where $x \le 0$.

- Examples of functions that are monotonically increasing but not convex include $f(x) = \sqrt{x}$ and $g(x) = \log x$.

- Examples of functions that are convex but not monotonically increasing include $h(x) = x^2$ and $k(x) = -x$.

- The function $f(x) = \frac{1}{x}$ has $f''(x) = \frac{2}{x^3}$, which is greater than 0 if $x > 0$, so $f(x)$ is convex on the interval $(0, \infty)$. It is concave on the interval $(-\infty, 0)$.

- The function $f(x) = \frac{1}{x^2}$, with $f(0) = \infty$, is convex on the interval $(0, \infty)$ and convex on the interval $(-\infty, 0)$, but not convex on the interval $(-\infty, \infty)$, because of the singularity at $x = 0$.
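The last two examples can be spot-checked with a single chord. In the sketch below, `convexity_gap` is a hypothetical helper that returns $t f(x_1) + (1-t) f(x_2) - f(t x_1 + (1-t) x_2)$, using the midpoint $t = \tfrac12$ by default; a negative value is a witness of non-convexity. The function `reciprocal_square` follows the convention $f(0) = \infty$ stated above, represented here by `math.inf`.

```python
import math


def reciprocal_square(x):
    """f(x) = 1/x^2 with the convention f(0) = +inf, as in the example above."""
    return math.inf if x == 0 else 1.0 / (x * x)


def convexity_gap(f, x1, x2, t=0.5):
    """t*f(x1) + (1-t)*f(x2) - f(t*x1 + (1-t)*x2); negative means a violation."""
    return t * f(x1) + (1 - t) * f(x2) - f(t * x1 + (1 - t) * x2)


# 1/x: convex on (0, inf), concave on (-inf, 0).
print(convexity_gap(lambda x: 1 / x, 1.0, 3.0) >= 0)     # True
print(convexity_gap(lambda x: 1 / x, -3.0, -1.0) <= 0)   # True

# 1/x^2: convex on each half-line, but the chord from -1 to 1 passes over x = 0.
print(convexity_gap(reciprocal_square, 1.0, 3.0) >= 0)   # True
print(convexity_gap(reciprocal_square, -1.0, 1.0))       # -inf
```

A single chord can only disprove convexity; the positive gaps on the half-lines are consistent with the second-derivative arguments above but do not replace them.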

Functions of n variables

See also

- Concave function

- Convex analysis

- Convex conjugate

- Convex curve

- Convex optimization

- Geodesic convexity

- Hahn–Banach theorem

- Hermite–Hadamard inequality

- Invex function

- Jensen's inequality

- K-convex function

- Kachurovskii's theorem, which relates convexity to monotonicity of the derivative

- Karamata's inequality

- Logarithmically convex function

- Pseudoconvex function

- Quasiconvex function

- Subderivative of a convex function

Notes

- [1] "Lecture Notes 2" (PDF). www.stat.cmu.edu. Retrieved 3 March 2017.

- [2] "Concave Upward and Downward". Archived from the original on 2013-12-18.

- [3] Stewart, James (2015). Calculus (8th ed.). Cengage Learning. pp. 223–224. ISBN 978-1305266643.

- [4] Hamming, Richard W. (2012). Methods of Mathematics Applied to Calculus, Probability, and Statistics (illustrated ed.). Courier Corporation. p. 227. ISBN 978-0-486-13887-9.

- [5] Uvarov, Vasiliĭ Borisovich (1988). Mathematical Analysis. Mir Publishers. pp. 126–127. ISBN 978-5-03-000500-3.

- [6] Prügel-Bennett, Adam (2020). The Probability Companion for Engineering and Computer Science (illustrated ed.). Cambridge University Press. p. 160. ISBN 978-1-108-48053-6.

- [7] Boyd, Stephen P.; Vandenberghe, Lieven (2004). Convex Optimization (PDF). Cambridge University Press. ISBN 978-0-521-83378-3. Retrieved October 15, 2011.

- [8] Donoghue, William F. (1969). Distributions and Fourier Transforms. Academic Press. p. 12. ISBN 9780122206504. Retrieved August 29, 2012.

- [9] "If f is strictly convex in a convex set, show it has no more than 1 minimum". Math StackExchange. 21 March 2013. Retrieved 14 May 2016.

- [10] Altenberg, L. (2012). "Resolvent positive linear operators exhibit the reduction phenomenon". Proceedings of the National Academy of Sciences. 109 (10): 3705–3710.

- [11] Bertsekas, Dimitri (2003). Convex Analysis and Optimization. Contributors: Angelia Nedic and Asuman E. Ozdaglar. Athena Scientific. p. 72. ISBN 9781886529458.

- [12] Ciarlet, Philippe G. (1989). Introduction to Numerical Linear Algebra and Optimisation. Cambridge University Press. ISBN 9780521339841.

- [13] Nesterov, Yurii (2004). Introductory Lectures on Convex Optimization: A Basic Course. Kluwer Academic Publishers. pp. 63–64. ISBN 9781402075537.

- [14] Zălinescu, C. (2002). Convex Analysis in General Vector Spaces. World Scientific. ISBN 9812380671.

- [15] Bauschke, H.; Combettes, P. L. (2011). Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer. p. 144. ISBN 978-1-4419-9467-7.

- [16] Kingman, J. F. C. (1961). "A Convexity Property of Positive Matrices". The Quarterly Journal of Mathematics. 12: 283–284. doi:10.1093/qmath/12.1.283.

- [17] Cohen, J. E. (1981). "Convexity of the dominant eigenvalue of an essentially nonnegative matrix". Proceedings of the American Mathematical Society. 81 (4): 657–658.

References

- Bertsekas, Dimitri (2003). Convex Analysis and Optimization. Athena Scientific.

- Borwein, Jonathan, and Lewis, Adrian. (2000). Convex Analysis and Nonlinear Optimization. Springer.

- Donoghue, William F. (1969). Distributions and Fourier Transforms. Academic Press.

- Hiriart-Urruty, Jean-Baptiste, and Lemaréchal, Claude. (2004). Fundamentals of Convex analysis. Berlin: Springer.

- Krasnosel'skii M.A., Rutickii Ya.B. (1961). Convex Functions and Orlicz Spaces. Groningen: P.Noordhoff Ltd.

- Lauritzen, Niels (2013). Undergraduate Convexity. World Scientific Publishing.

- Luenberger, David (1984). Linear and Nonlinear Programming. Addison-Wesley.

- Luenberger, David (1969). Optimization by Vector Space Methods. Wiley & Sons.

- Rockafellar, R. T. (1970). Convex analysis. Princeton: Princeton University Press.

- Thomson, Brian (1994). Symmetric Properties of Real Functions. CRC Press.

- Zălinescu, C. (2002). Convex analysis in general vector spaces. River Edge, NJ: World Scientific Publishing Co., Inc. pp. xx+367. ISBN 981-238-067-1. MR 1921556.

External links

- "Convex function (of a real variable)", Encyclopedia of Mathematics, EMS Press, 2001 [1994]

- "Convex function (of a complex variable)", Encyclopedia of Mathematics, EMS Press, 2001 [1994]

Source: https://en.wikipedia.org/wiki/Convex_function
